Today, let’s attempt something new: deploying Juniper vMX software on a bare-metal server running the CentOS 7 system for a lab experiment.

You can find the related architecture and explanations on the Juniper Knowledge Base. Allow me to guide you through the general installation steps.

1. Preparation Work

Here are the recommended hardware specifications for deployment:

BIOS Settings:

VT-x & VT-d on

Hyperthreading on

OS: CentOS 7 Latest ( Please download it from the official site )

locale: en_US.UTF-8

Kernel version: 3.10.0-327.el7.x86_64

CPU Cores: Intel CPU, Ivy Bridge or higher ( AMD CPU not yet tested )

RAM: 8 GiB for Lite-mode ( Lab experiments ), 32 ~ 64 GiB for Riot-mode ( Production environment )

Network Card: Intel X520 ( IXGBE 2*10Gbps ) or Intel X710 ( I40E 4*10Gbps ) / XL710 ( I40E 2*40Gbps )

You may choose between firewalld or iptables for netfilter manipulation based on your situation. As this is an experimental environment, I opted for iptables and opened up all the ports.

If you’re deploying this in a production environment, please formulate rules according to the specific circumstances.
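The VT-x requirement from the list above can be spot-checked from the running system. Here is a minimal sketch, assuming a Linux host with a readable /proc/cpuinfo; `has_cpu_flag` is a hypothetical helper of my own, not part of vMX. It only tells you that the CPU advertises VT-x (the `vmx` flag); VT-d and the BIOS toggles themselves are not visible this way.

```shell
#!/usr/bin/env bash
# Sketch: check whether a cpuinfo-style file lists a given CPU flag.
# has_cpu_flag is a hypothetical helper name used for illustration.

has_cpu_flag() {
    local flag="$1" file="${2:-/proc/cpuinfo}"
    # Take the first "flags" line and look for the flag as a whole word
    grep -m1 '^flags' "$file" | grep -qw -- "$flag"
}

# Example call on the host itself:
#   has_cpu_flag vmx && echo "CPU advertises VT-x"
```

If the call returns non-zero on an Intel host, the BIOS settings are the first thing I would recheck.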

First, we need to downgrade the kernel to the required version. vMX has strict kernel version requirements, and the router will not start on an unsupported kernel.
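To see whether a downgrade is needed at all, you can compare the running kernel with the version listed in the requirements. This is a small sketch under that assumption; `REQUIRED_KERNEL` and `kernel_matches` are names I made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: compare the running kernel with the version listed in the
# requirements above. Variable and function names are hypothetical.

REQUIRED_KERNEL="3.10.0-327.el7.x86_64"

kernel_matches() {
    # Use the argument if given, otherwise ask the running system
    local running="${1:-$(uname -r)}"
    [ "$running" = "$REQUIRED_KERNEL" ]
}

if kernel_matches; then
    echo "kernel OK: $(uname -r)"
else
    echo "kernel mismatch: running $(uname -r), expected $REQUIRED_KERNEL"
fi
```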

The kernel files can be found HERE:

After downloading the file, install it with the “yum” command, then set the required version as the default boot entry.

Ensure you’ve selected the correct version.

grub2-set-default "CentOS Linux (3.10.0-327.el7.x86_64) 7 (Core)"

grub2-editenv list

saved_entry=CentOS Linux (3.10.0-327.el7.x86_64) 7 (Core)

Next, switch to the specific locale and disable SELinux as well.

sudo localectl set-locale LANG=en_US.utf8

setenforce 0

cat > /etc/selinux/config <<MAVE

SELINUX=disabled

SELINUXTYPE=targeted

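MAVE

The commands above set the locale to en_US.UTF-8 and disable SELinux on your server: setenforce 0 switches it to permissive mode immediately, and the config file keeps it disabled after the next reboot. This configuration should satisfy the deployment requirements.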
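If you want to confirm what the config file will apply on the next boot, a short sketch like the one below can read it back. `selinux_config_mode` is a hypothetical helper name; the default path is the one written in the step above.

```shell
#!/usr/bin/env bash
# Sketch: print the SELINUX= mode stored in the SELinux config file.
# selinux_config_mode is a hypothetical helper name.

selinux_config_mode() {
    local file="${1:-/etc/selinux/config}"
    # Match only "SELINUX=..." lines (SELINUXTYPE= does not match),
    # and keep the last one in case the file has several
    sed -n 's/^SELINUX=\(.*\)$/\1/p' "$file" | tail -n 1
}

# Example call on the host itself:
#   selinux_config_mode      # persistent setting
#   getenforce               # current runtime mode
```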

Upon completion, we can commence the installation of the required packages. Please install the packages listed below. You can directly copy and paste the commands onto your server:

yum -y install epel-release python-pip python-devel python3-pip python3-devel numactl-libs libpciaccess-devel parted-devel yajl-devel libxml2-devel glib2-devel libnl-devel libxslt-devel libyaml-devel numactl-devel redhat-lsb kmod-ixgbe libvirt-daemon-kvm numactl telnet net-tools

# Basic package

PATH=/opt/rh/python27/root/usr/bin:$PATH

export PATH

pip install netifaces pyyaml

# or

# pip3 install netifaces pyyaml, if the command above does not work

# For startup scripts

yum -y install libhugetlbfs libhugetlbfs-utils bridge-utils

yum groupinstall "virtualization host" && yum groupinstall "virtualization platform"

ln -s /usr/libexec/qemu-kvm /usr/bin/qemu-system-x86_64

# Virtualized environments, in order to run images

Great, we’ve finished installing the packages, but hold on, there are a few more steps to optimize the efficiency of running vMX.

Firstly, please append intel_iommu=on default_hugepagesz=1G hugepagesz=1G hugepages=16 after rhgb quiet in /etc/default/grub.

This action enables Hugepages for the forwarding plane, with each page consuming 1GB of memory, adding up to a total of 16GB, and enables IOMMU for SRIOV functions.

If you’re deploying vMX for experimental purposes, you may omit the “HugePage allocation” step. Please remember that IOMMU still needs to be activated; otherwise, the SRIOV function will be inoperable!
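The arithmetic behind those kernel arguments is simply page count times page size. As a rough sketch, the helpers below parse a kernel command line string and report the reserved total and whether intel_iommu=on is present. Both function names are hypothetical, and only the "G" size suffix used in this article is handled.

```shell
#!/usr/bin/env bash
# Sketch: inspect a kernel command line for the settings described above.
# hugepage_total_gib and has_iommu are hypothetical helper names.

hugepage_total_gib() {
    local count=0 size="" arg
    for arg in $1; do   # unquoted on purpose: split on whitespace
        case "$arg" in
            hugepages=*)  count="${arg#hugepages=}" ;;
            hugepagesz=*) size="${arg#hugepagesz=}" ;;
        esac
    done
    case "$size" in
        *G) echo $(( count * ${size%G} )) ;;   # pages x GiB per page
        *)  return 1 ;;                        # other suffixes not handled
    esac
}

has_iommu() {
    case " $1 " in
        *" intel_iommu=on "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example call after the reboot:
#   hugepage_total_gib "$(cat /proc/cmdline)"
```

With the arguments from this article, 16 pages of 1G each come to 16 GiB, which matches the figure given above.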

root@MAVETECH ~ $ cat /etc/default/grub

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet intel_iommu=on default_hugepagesz=1G hugepagesz=1G hugepages=16"

GRUB_DISABLE_RECOVERY="true"

Secondly, regenerate the GRUB configuration:

grub2-mkconfig -o /boot/grub2/grub.cfg

Thirdly, disable APICv by adding this line to /etc/modprobe.d/kvm.conf:

options kvm-intel enable_apicv=0

Finally, disable NetworkManager and KSM.

systemctl disable NetworkManager --now

systemctl disable ksm --now

systemctl disable ksmtuned --now

Excellent, we’ve completed the pre-installation steps. Please restart the server to apply the configuration!

Post reboot, let’s verify the success of our operations:

root@MAVETECH ~ $ cat /sys/kernel/mm/ksm/run

0

# KSM is disabled

root@MAVETECH ~ $ cat /proc/meminfo | grep Huge

AnonHugePages: 16021504 kB

HugePages_Total: 16

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 1048576 kB

# Hugepages are enabled

root@MAVETECH ~ $ dmesg | grep iommu

[ 0.000000] Command line: BOOT_IMAGE=/vmlinuz-3.10.0-327.el7.x86_64 root=/dev/mapper/centos-root ro crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet intel_iommu=on default_hugepagesz=1G hugepagesz=1G hugepages=16

[ 0.000000] Kernel command line: BOOT_IMAGE=/vmlinuz-3.10.0-327.el7.x86_64 root=/dev/mapper/centos-root ro crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet intel_iommu=on default_hugepagesz=1G hugepagesz=1G hugepages=16

# Ensure IOMMU is functional - it's needed for SRIOV VFs

root@MAVETECH ~ $ lsmod | grep kvm

kvm_intel 162153 40

kvm 525259 1 kvm_intel

# Necessary modules are loaded

Everything appears to be in order =) Let’s proceed to the next segment: the vMX deployment.
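The manual checks above can also be scripted if you rebuild the lab often. The following is only a sketch: `meminfo_field` and `ksm_disabled` are hypothetical helpers, and the default paths are the same files read by hand above.

```shell
#!/usr/bin/env bash
# Sketch: scripted versions of two of the post-reboot checks above.
# meminfo_field and ksm_disabled are hypothetical helper names.

meminfo_field() {
    # Print the numeric value of one field, e.g. HugePages_Total
    local field="$1" file="${2:-/proc/meminfo}"
    awk -v key="$field:" '$1 == key { print $2 }' "$file"
}

ksm_disabled() {
    # KSM is off when the "run" file contains 0
    local file="${1:-/sys/kernel/mm/ksm/run}"
    [ "$(cat "$file")" = "0" ]
}

# Example calls on the host itself:
#   meminfo_field HugePages_Total
#   ksm_disabled && echo "KSM is off"
```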

2. Deployment of vMX

Now, let’s commence the deployment of vMX.

Before the deployment, you need to liaise with your Juniper Sales representative to obtain a copy of the file and acquire a license key (if it’s intended for a production environment). After procuring the file, unzip it and place it in the /home directory.

Before running the startup script, we need to adjust the configuration file located at /home/vmx/config/vmx.conf to suit our particular requirements. Feel free to customize your own file based on my example.

##############################################################

#

# vmx.conf

# Config file for vmx on the hypervisor.

# Uses YAML syntax.

# Leave a space after ":" to specify the parameter value.

#

##############################################################

---

#Configuration on the host side - management interface, VM images etc.

HOST:

    identifier                : vmx1 # Maximum 6 characters

    host-management-interface : em3

    routing-engine-image      : "/home/vmx/images/junos-vmx-x86-64-xxxxxxxx.qcow2"

    routing-engine-hdd        : "/home/vmx/images/vmxhdd.img"

    forwarding-engine-image   : "/home/vmx/images/vFPC-xxxxxxxx.img"

# Don't forget to verify the image path

---

#External bridge configuration

BRIDGES:

    - type  : external

      name  : br-ext # Max 10 characters

      stp   : off # off / on

# No modifications necessary in this section, keep default values

---

#vRE VM parameters

CONTROL_PLANE:

    vcpus       : 4

    memory-mb   : 16384

    console_port: 8601

    interfaces  :

      - type      : static

        ipaddr    : 10.102.144.94

        macaddr   : "0A:00:DD:C0:DE:0E"

---

#vPFE VM parameters

FORWARDING_PLANE:

    memory-mb   : 16384

    vcpus       : 32

    console_port: 8602

    device-type : sriov

    # If a virtio interface has been assigned, use "mixed" instead

    interfaces  :

      - type      : static

        ipaddr    : 10.102.144.98

        macaddr   : "0A:00:DD:C0:DE:10"

#Resource allocation.

#--Control plane:--

#Assign 1 core in lite-mode, 4 cores in riot-mode

#--Forwarding plane:--

#Assign 4 cores in lite-mode, 16 cores in riot-mode (the higher, the better. Max 32 cores)

#mac-addresses no need to modify, keep the default

---

#Interfaces

#Please note that the "nic" here needs to be filled with the parent nic name of the VF.

#such as p3p1, not the VF name (p3p1_0).

JUNOS_DEVICES:

   - interface            : et-0/0/0

     type                 : sriov

     mtu                  : 9216

     port-speed-mbps      : 40000

     nic                  : p3p1

     virtual-function     : 0

     mac-address          : "32:90:57:0f:2c:00"

     description          : "et-0/0/0 interface"

   - interface            : et-0/0/1

     type                 : sriov

     mtu                  : 9216

     port-speed-mbps      : 40000

     nic                  : p3p2

     virtual-function     : 0

     mac-address          : "32:90:57:0f:2d:00"

     description          : "et-0/0/1 interface"

Well done! We’re now ready to launch vMX.
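Before launching, it may be worth confirming that the image paths in vmx.conf actually exist, since a wrong path is an easy mistake to make. This is a minimal sketch, assuming the three image keys are written as quoted strings as in my example; `vmx_conf_paths` and `missing_vmx_images` are hypothetical helpers and not part of the vMX scripts.

```shell
#!/usr/bin/env bash
# Sketch: list the image paths in vmx.conf and report missing files.
# Both function names are hypothetical; this is plain text matching,
# not a real YAML parser.

vmx_conf_paths() {
    local conf="${1:-/home/vmx/config/vmx.conf}"
    # Extract the quoted value of the three image-related keys
    sed -n -E 's/^[[:space:]]*(routing-engine-image|routing-engine-hdd|forwarding-engine-image)[[:space:]]*:[[:space:]]*"([^"]*)".*$/\2/p' "$conf"
}

missing_vmx_images() {
    local conf="$1" path paths status=0
    paths=$(vmx_conf_paths "$conf")
    while IFS= read -r path; do
        [ -n "$path" ] || continue
        if [ ! -f "$path" ]; then
            echo "missing: $path"
            status=1
        fi
    done <<EOF
$paths
EOF
    return "$status"
}

# Example call on the host itself:
#   missing_vmx_images /home/vmx/config/vmx.conf && echo "all images found"
```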

root@MAVETECH ~ $ cd /home/vmx

root@MAVETECH ~ $ ./vmx.sh -lv --install

#When using it for the first time, please use the --install argument. Afterwards, you only need to use --start

#---output log begin---

{..snip..}

VMX Bringup Completed

==================================================

Check if br-ext is created........................[Created]

Check if br-int-vmx1 is created...................[Created]

Check if VM vcp-vmx1 is running...................[Running]

Check if VM vfp-vmx1 is running...................[Running]

Check if tap interface vfp-ext-vmx1 exists........[OK]

Check if tap interface vfp-int-vmx1 exists........[OK]

Check if tap interface vcp-ext-vmx1 exists........[OK]

Check if tap interface vcp-int-vmx1 exists........[OK]

==================================================

VMX Status Verification Completed.

==================================================

Log file........................................../home/vmx/build/vmx1/logs/vmx_xxxxxxxx.log

==================================================

Thank you for using VMX

==================================================

{..snip..}

#---output log end---

root@MAVETECH ~ $ ./vmx.sh --console vcp vmx1

--

Login Console Port For vcp-vmx1 - 8601

Press Ctrl-] to exit anytime

--

Trying ::1...

telnet: connect to address ::1: Connection refused

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

FreeBSD/amd64 (MAVE-VMX-LAB) (ttyu0)

login:

Before moving on to the verification phase, we must perform some initial configuration so that the router functions properly:

root@MAVE-VMX-LAB> show configuration chassis | display set

set chassis aggregated-devices ethernet device-count 4

set chassis fpc 0 pic 0 interface-type et

set chassis fpc 0 pic 0 port 0 speed 40g

set chassis fpc 0 pic 0 port 1 speed 40g

# Force interfaces to operate at 40Gbps

set chassis fpc 0 performance-mode

set chassis alarm management-ethernet link-down ignore

set chassis network-services enhanced-ip

# Choose mode: enhanced-ip, in preparation for upcoming experiments

root@MAVE-VMX-LAB> show configuration interfaces et-0/0/0 | display set

set interfaces et-0/0/0 description "[pci@0000:03:02.0 - p3p1] - Connected to Upstream Switch"

set interfaces et-0/0/0 flexible-vlan-tagging

set interfaces et-0/0/0 vlan-offload

set interfaces et-0/0/0 mtu 9216

set interfaces et-0/0/0 unit 0 vlan-id 6

set interfaces et-0/0/0 unit 0 family inet address 10.0.0.2/24

# After committing the configuration, don't forget to execute "request chassis fpc restart slot 0"

3. Verifying Operational Status

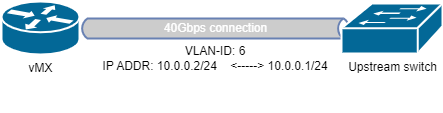

vMX has started successfully, which looks rather impressive. The interface p3p1 is connected to the upstream switch, and the upstream side is configured with a sub-interface using VLAN ID 6 and IP address 10.0.0.1/24.

Now, let’s test its availability!

root@MAVE-VMX-LAB> show interfaces terse et-0/0/0

Interface Admin Link Proto Local Remote

et-0/0/0 up up

et-0/0/0.0 up up inet 10.0.0.2/24

{..snip..}

root@MAVE-VMX-LAB> ping 10.0.0.1 count 2

PING 10.0.0.1 (10.0.0.1): 56 data bytes

64 bytes from 10.0.0.1: icmp_seq=0 ttl=64 time=47.585 ms

64 bytes from 10.0.0.1: icmp_seq=1 ttl=64 time=0.727 ms

--- 10.0.0.1 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.727/24.156/47.585/23.429 ms

Everything appears to be functioning normally; vMX can now communicate with the upstream, which completes the deployment!

Thank you for reading. If there’s anything you don’t understand or need clarification on, don’t hesitate to drop a comment. I’ll be happy to assist.